2024-02-19: using Vulkan to accelerate LLMs on AMD GPUs

Since the last post has been written, there has been a few interesting developments in the world of large language models.

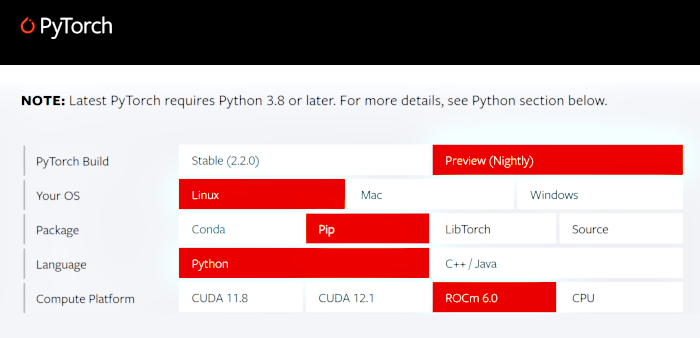

Notably, the industry wide support of AMD GPUs continues apace, with large frameworks like PyTorch even offering regular and up-to-date releases supporting the latest versions of ROCm as it rapidly reaches feature parity with CUDA.

Historically the "red team" track record was somewhat mixed, with only the top and Pro-series GPUs supported, but as of ROCm v6.0, AMD has quietly enabled this on all cards on Linux.

So if you have one, consider giving it a shot; your mileage might vary of course, as many of these large language models and their respective datasets are quite big and may not necessarily fit in low-end consumer hardware.

Anyway, compared to even a few years ago, installation to take advantage of an existing Radeon card is straightforward.

Simply create a Python venv and then activate. Afterwards, install PyTorch with the desired Python wheel:

python -m venv ~/python_venv

source ~/python_venv/bin/activate

pip3 install --pre torch torchvision torchaudio --index-url \

https://download.pytorch.org/whl/nightly/rocm6.0

All done, now you can spin up HIP llamas with ROCm!

Actually many applications and frameworks support AMD now, some of the more interesting ones include:

- llama.cpp - a project to run LLMs using C/C++

- TensorFlow - the popular machine learning framework

- ZLUDA - an emulation library to run compiled CUDA applications on AMD GPUs

On that note, an exciting update to the llama.cpp is the recent Vulkan backend implementation project which attempts to "bridge the gap" and allows pretty much any device with Vulkan shaders to run a LLM and on nearly any modern operating system.

Potentially both Mac or Windows developers could take advantage of this to run LLMs faster; as could programmers on more compact or embedded platforms, such as Android phones or the newer variants of the Raspberry Pi.

A more interesting aspect of the Vulkan backend is that GPU shader architectures are created with parallelization in mind, potentially allowing cost-efficient customer hardware to run large language models with a greater number of tokens per second.

So with that in mind, it is worth testing this concept to determine how good an idea this might be.

Two very interesting and popular models in the AI developer community, that are also not-to-large in terms of parameters, are as follows:

- OpenHermes 2.5 - a data-rich varient of the excellent Mistral 7B model

- Phi 2 - an attempt by Microsoft to create a powerful yet super lightweight 2.7B model

For this benchmark, two simple prompts were selected:

Write me a python function that can read a CSV file into a Pandas DataFrame

Tell me about the planet Mars

The command used to start the llama.cpp server instance is below, where XX was the number of layers to defer to the GPU via the Vulkan backend:

cd llama.cpp

./server -m /path/to/llm_models/name_of_model.Q8_0.gguf --n-gpu-layers XX

Since running the full LLM model itself on budget hardware is unlikely to be desirable, 8-bit quantized versions of these models were used. Quantized models are slightly lower quality in their responses but are somewhat faster and also offer a lower memory footprint; allowing them to be ran on CPUs alone.

The results are presented below, with the models themselves subdivided into 28 pieces and ran in parallel to generate the tokens.

The scenarios begin with all 28 layers being ran on the AMD Ryzen 7600X CPU and gradually with more layers added to the Radeon RX 7600 GPU used in this benchmark.

For each set of results, the prompt used during the benchmark is denoted.

Write me a python function that can read a CSV file into a Pandas DataFrame

| GPU Layers | Phi-2 | OpenHermes 2.5 |

|---|---|---|

| 0 | 13.74 tokens/second | 5.63 tokens/second |

| 8 | 15.12 tokens/second | 6.62 tokens/second |

| 16 | 17.56 tokens/second | 8.02 tokens/second |

| 24 | 21.66 tokens/second | 10.89 tokens/second |

| 28 | 25.71 tokens/second | 13.29 tokens/second |

Tell me about the planet Mars

| GPU Layers | Phi-2 | OpenHermes 2.5 |

|---|---|---|

| 0 | 13.41 tokens/second | 5.62 tokens/second |

| 8 | 14.41 tokens/second | 6.65 tokens/second |

| 16 | 17.64 tokens/second | 8.00 tokens/second |

| 24 | 21.51 tokens/second | 10.75 tokens/second |

| 28 | 26.06 tokens/second | 12.35 tokens/second |

While these are admittedly only a handful of data points, I think there are some interesting conclusions here:

- both small models (2.7B) and larger models (7B) seem to benefit from offloading their layers to a GPU

- note the jump in performance going from 24 to 28 layers; it seems that offloading all of the layers of a model onto the GPU yields a much greater jump in tokens per second

- GPU offloading onto Vulkan shaders is still in the early days, at some later time, the performance gains may continue to improve

The sheer number of tokens per second yielded is somewhat compelling and exciting. Since it may hint at much better performance over the next few years, and in turn, less reliance on Nvidia GPUs as the main method of running LLMs.

With that said, the resources needed to train or fine-tune models will likely remain high for quite a while. This is due to the nature of architecture and also the incredible size of the available datasets.

Finally, in the long-term future, as compact and small-factor devices such as phones improve and gain greater graphical capabilities then it is very possible to imagine them running models with many billions of parameters.