2018-08-31: taking kubernetes for a spin - a short primer

In the realm of modern software development, web-based applications are often key to the growth and success of a company. To meet the demands of rapid feature implementation, a number of frameworks have appeared that allow developers to quickly create whatever is needed and to replace or remove other elements with ease.

One unfortunate consequence of this is that quite a lot of these technologies were built under the assumption that CPU speed and efficiency would keep improving for many more decades. In recent times the limits of silicon transistors and photolithography mean that ongoing speed improvements come mainly from parallelism, either on multi-core CPUs or on clusters of GPUs.

With this in mind, several attempts have been made to write code libraries which take advantage of these clusters. Another approach is to scale horizontally via virtualization and containerization.

Containers do have limits, mostly related to performance and occasional memory corruption, yet they can be useful since they have many unique aspects. They can be more-or-less fully separated from the main operating system and are able to be near-perfect clones of whatever instance they are derived from.

A recent application of note is docker, a containerization app that allows developers to spin up quick instances to develop programs with little to no risk to the OS, or even to test multiple Linux distros or versions of a distro, should the developer require this.

Since the inception of docker, Google has worked to extend the functionality of containers so that web applications can have better scalability and durability. The end result is Kubernetes, often abbreviated as k8s. From Wikipedia:

Kubernetes is an open-source container-orchestration system for automating deployment, scaling and management of containerized applications.

Essentially, k8s is a tool to assist with handling a large cluster of instances, for one or more applications or servers. Specifically, this does differ from docker in a few interesting ways:

- scale directly based on CPU usage and availability

- automated rollout and rollback of instances, in the style of version control or staging systems

- storage orchestration; allows mounting of remote storage via providers such as Google Cloud or AWS

- self-healing; it can attempt to replace or reschedule applications or instances that fail

- uses internal private IP addresses, reducing the need for complicated network topologies

- utilizes templated configuration files; similar to Chef, this supports a DevOps work environment

So if your web application is written with the containerization paradigm in mind, then it could plausibly scale well based on workload, have a limited ability to repair or recover from errors or corruption, and have some level of cloud redundancy.
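To make the CPU-based scaling point more concrete, here is a minimal sketch of the replica calculation described in the Kubernetes Horizontal Pod Autoscaler documentation: desired replicas = ceil(current replicas × current metric / target metric). The function name `desired_replicas` is hypothetical, and the real autoscaler also applies a tolerance band and minimum/maximum bounds that are left out here.

```shell
# Sketch of the autoscaler's core rule:
#   desired = ceil(current_replicas * current_cpu / target_cpu)
# All arguments are integers; CPU values are utilization percentages.
desired_replicas() {
    current_replicas=$1
    current_cpu=$2
    target_cpu=$3
    # Integer ceiling division: (a + b - 1) / b
    echo $(( (current_replicas * current_cpu + target_cpu - 1) / target_cpu ))
}

# Two pods averaging 90% CPU against a 50% target: ceil(3.6) = 4
desired_replicas 2 90 50
```

In other words, pods that run hotter than the target cause more pods to be requested, and pods that idle cause the count to shrink.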

While that does sound complex, it is more than a bit intriguing due to all of the services it can potentially replace. With that in mind, begin by ensuring the machine that will run Kubernetes supports hardware virtualization and that it is enabled in the BIOS and operating system.
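On Linux, one common way to check for hardware virtualization is to look for the `vmx` (Intel VT-x) or `svm` (AMD-V) flag in `/proc/cpuinfo`. The sketch below assumes a Linux host; the function name `count_virt_flags` is hypothetical, and a nonzero count does not by itself guarantee that the feature is enabled in the firmware.

```shell
# Count the logical CPUs whose "flags" line includes vmx (Intel VT-x)
# or svm (AMD-V). The file argument defaults to /proc/cpuinfo.
count_virt_flags() {
    cpuinfo=${1:-/proc/cpuinfo}
    # Only look at lines starting with "flags" so that other lines which
    # mention vmx are not counted twice. grep -c prints the match count
    # and exits non-zero when it is 0, hence the "|| true".
    grep -E '^flags' "$cpuinfo" | grep -E -c -w 'vmx|svm' || true
}

if [ -r /proc/cpuinfo ]; then
    echo "CPUs reporting virtualization flags: $(count_virt_flags)"
fi
```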

Afterwards, install kubectl and minikube by following the official documentation. Both are required to run the full suite, along with docker.

To summarize, kubectl allows management and fine-tuning of a Kubernetes cluster, while minikube makes it easy to spin up a local single-node cluster during development.
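Before going further, it can help to confirm that all three tools are actually on the PATH. The following is a small sketch; the helper name `missing_tools` is made up for this example.

```shell
# Print the name of each listed tool that is not found on PATH.
missing_tools() {
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || echo "$tool"
    done
}

missing=$(missing_tools kubectl minikube docker)
if [ -n "$missing" ]; then
    # $missing is left unquoted on purpose so the names print on one line
    echo "Not installed yet:" $missing
else
    echo "kubectl, minikube and docker are all on PATH"
fi
```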

With all of the components installed, start minikube via the following command:

minikube start

If this works, a Kubernetes cluster will start up on the current machine. In the event that none of the typical VM drivers appear to function, set the VM driver to none, as shown below. Later on, consider installing VirtualBox and using it to create instances if KVM is unavailable.

minikube start --vm-driver none
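The two start commands can be combined into a single fallback. This is only a sketch, using the flag exactly as written in this post; the function name `start_minikube` is hypothetical, and the none driver typically expects a Linux host with docker installed and sufficient privileges.

```shell
# Try the default VM driver first; if that fails, retry with the "none"
# driver, which runs the cluster components directly on the host.
start_minikube() {
    minikube start || minikube start --vm-driver none
}

# Usage (not run automatically here):
#   start_minikube
```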

If that worked, a minikube node should have started, and it can now be managed via kubectl. To ensure it actually started, do a quick check like so:

kubectl get nodes

The output from that command will resemble something like the following:

| NAME | STATUS | ROLES | AGE | VERSION |
| --- | --- | --- | --- | --- |
| minikube | Ready | master | 13h | v1.10.0 |
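For scripting, the same check can be reduced to a filter over kubectl's whitespace-separated output. A minimal sketch, with the hypothetical helper name `not_ready_nodes`:

```shell
# Read `kubectl get nodes` output on stdin and print the name of every
# node whose STATUS column is not exactly "Ready". The header is skipped.
not_ready_nodes() {
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Usage (requires a running cluster):
#   kubectl get nodes | not_ready_nodes
```

Empty output means every listed node reported Ready.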

The minikube node is now available and can be adjusted and configured, both via a web browser and via the command line. To enable the web interface, type the following command:

minikube dashboard

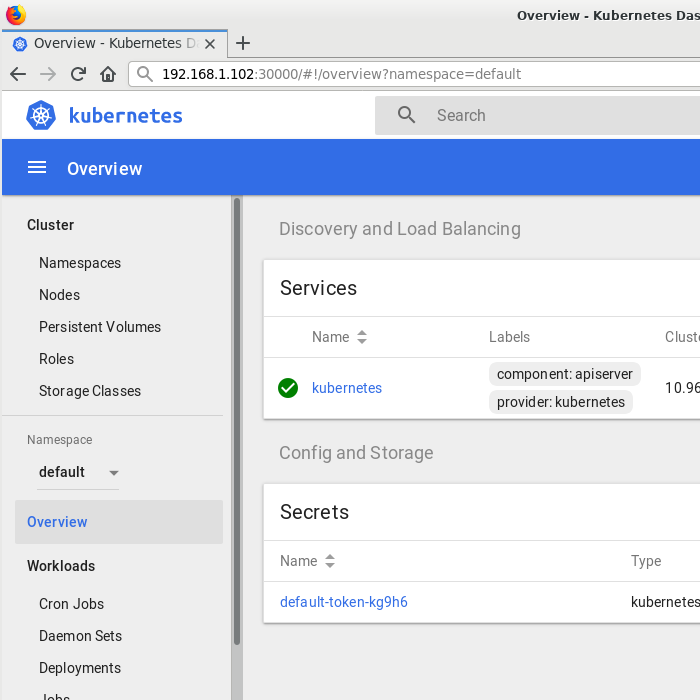

Upon doing so, the Kubernetes interface will appear in the browser, where it is possible to create pods and adjust configuration settings of the given minikube instance.

Feel free to browse through the various options and pages. Of note are the lists of nodes and pods on this k8s server. Since a minikube node is active, it is possible to spawn containers on it, wrapped in units called pods. Typically a pod is designed to run a single command, microservice or child process.

To test whether pod creation is working, there is an image in the Google Cloud Container Registry labelled echoserver, which echoes back whatever is thrown at it; it is useful for making the "Hello, world!" equivalent of a pod. To do so, use the run feature of kubectl, which downloads the container image and starts it:

kubectl run hello-minikube --image=k8s.gcr.io/echoserver:1.4 --port=8080
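The same thing can be expressed declaratively. The sketch below writes a Deployment manifest that is roughly equivalent to the command above; the file name, the `app` label and the `apps/v1` API version are assumptions made for this example rather than what `kubectl run` generated at the time. On newer kubectl releases, `kubectl run` creates a bare pod instead of a deployment, so a manifest like this is one way to get the deployment-backed setup the rest of this post relies on.

```shell
# Write a Deployment manifest roughly equivalent to the `kubectl run`
# command above. The "app" label is an illustrative choice.
write_manifest() {
    cat > "$1" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-minikube
spec:
  replicas: 1
  selector:
    matchLabels:
      app: hello-minikube
  template:
    metadata:
      labels:
        app: hello-minikube
    spec:
      containers:
        - name: hello-minikube
          image: k8s.gcr.io/echoserver:1.4
          ports:
            - containerPort: 8080
EOF
}

write_manifest hello-minikube.yaml
# Then, against a running cluster:
#   kubectl apply -f hello-minikube.yaml
```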

Assuming this ran as intended, a pod will be created with a name beginning with hello-minikube, which can be viewed either via the CLI or a web browser. To obtain the list of pods via the command line, do the following:

kubectl get pods

Which yields output similar to the following:

| NAME | READY | STATUS | RESTARTS | AGE |
| --- | --- | --- | --- | --- |
| hello-minikube-6c47c66d8-2bhml | 1/1 | Running | 0 | 52m |
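Because the suffix of the pod name is generated, it will differ on every cluster. A small filter over kubectl's output, sketched here with the hypothetical name `pod_by_prefix`, avoids copying it by hand:

```shell
# Read `kubectl get pods` output on stdin and print the first pod name
# that starts with the given prefix. The header line is skipped.
pod_by_prefix() {
    awk -v prefix="$1" 'NR > 1 && index($1, prefix) == 1 { print $1; exit }'
}

# Usage (requires a running cluster):
#   pod=$(kubectl get pods | pod_by_prefix hello-minikube)
#   echo "$pod"
```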

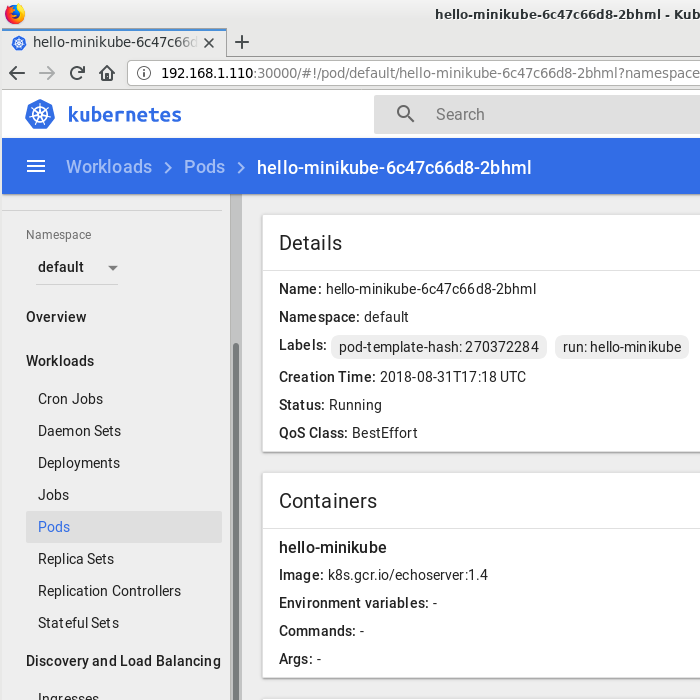

In the web browser, it can be found via the Workloads > Pods > hello-minikube-hex-timestamp. That page shows the node, status and the current quality of service, among other details:

If the status indicates that the pod is running, open the pod's address in a browser, or use wget or curl, to obtain a read-out of what values were passed to the pod. In this case the address is 172.17.0.4:8080, so the output should be something akin to this:

CLIENT VALUES:

client_address=172.17.0.1

command=GET

real path=/

query=nil

request_version=1.1

request_uri=http://172.17.0.4:8080/

SERVER VALUES:

server_version=nginx: 1.10.0 - lua: 10001

HEADERS RECEIVED:

accept=*/*

accept-encoding=identity

connection=Keep-Alive

host=172.17.0.4:8080

user-agent=Wget/1.19.5 (linux-gnu)

BODY:

-no body in request-
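When only one field of that read-out matters, it can be picked out with a short filter. This is a sketch: the helper name `echo_value` is hypothetical, and the usage lines assume the host can reach the pod network directly, as was the case in this setup.

```shell
# Read an echoserver response on stdin and print the value of one key,
# for example client_address or request_uri.
echo_value() {
    # Split on "=" to match the key, then print everything after the
    # first "=" so that values containing "=" survive intact.
    awk -F= -v key="$1" '$1 == key { print substr($0, length(key) + 2); exit }'
}

# Usage (requires a running cluster; POD holds the pod name):
#   ip=$(kubectl get pod "$POD" -o jsonpath='{.status.podIP}')
#   curl -s "http://$ip:8080/" | echo_value client_address
```

The jsonpath query in the usage lines is also one way to look up the pod's address in the first place.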

The pod seems ready to run, so attempt to send it a POSIX command and see what happens. As a number of images feature readme files in their root, go ahead and take a gander at it using cat:

kubectl exec hello-minikube-6c47c66d8-2bhml -- cat README.md

It should contain a brief description and changelog of the specific image.

# Echoserver

This is a simple server that responds with the http headers it

received.

Image versions >= 1.4 removes the redirect introduced in 1.3.

Image versions >= 1.3 redirect requests on :80 with

`X-Forwarded-Proto: http` to :443.

Image versions > 1.0 run an nginx server, and implement the

echoserver using lua in the nginx config.

Image versions <= 1.0 run a python http server instead of

nginx, and don't redirect any requests.

Other commands work as well, and some images even contain interactive shells that allow internal adjusting and development.

The pods themselves can be manipulated via supervisors called deployments which offer finer control over what happens on the k8s server. Deployments can be listed in roughly the same manner as nodes and pods.

kubectl get deployments

The running minikube instance should now have at least one deployment, created by the earlier kubectl run command, which in turn is tied to that one pod. Deployments are rather simple and are defined by the YAML manifest that controls their behavior. To change it, use the edit command like so:

kubectl edit deploy/hello-minikube

In a text editor the contents of the YAML manifest will be opened for editing. For the echoserver image, part of it looks like this:

# Please edit the object below. Lines beginning with a '#' will
# be ignored, and an empty file will abort the edit. If an error
# occurs while saving this file will be reopened with the
# relevant failures.
#
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "1"
  creationTimestamp: 2018-08-29T17:18:36Z

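Suppose there was a need to make a small change to an image and then roll it out to all of the pods handled by this deployment. Note that the revision annotation is maintained by Kubernetes itself and is not what triggers a rollout; instead, change something in the pod template further down the file, such as the image tag, and save. The deployment will then handle the rollout of the change automatically and bump the revision to "2", which should occur rather fast in this case since there is only one pod. To check the status of the rollout, use the following command: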

kubectl rollout status deploy/hello-minikube
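A rollout can also be triggered from the command line rather than through the editor, using `kubectl set image`. The sketch below wraps that and the status check in one hypothetical function, `rollout_image`; the container name is assumed to match the deployment name, and the `1.10` tag in the usage line is an assumed newer image.

```shell
# Point one container of a deployment at a new image, then wait for the
# resulting rollout to finish.
rollout_image() {
    deployment=$1
    container=$2
    image=$3
    kubectl set image "deploy/$deployment" "$container=$image" &&
        kubectl rollout status "deploy/$deployment"
}

# Usage (requires a running cluster):
#   rollout_image hello-minikube hello-minikube k8s.gcr.io/echoserver:1.10
```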

If that worked, a success message will be shown. If a failure occurred, consider rolling back all of the pods using the undo feature, like so:

kubectl rollout undo deploy/hello-minikube --to-revision=1

Keeping track of revision numbers in that manner is good practice and allows rollout and rollback of versions of a pod, akin in philosophy to the version control practices found in software development.
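The status check and the undo can be chained so that a failed rollout is reverted automatically. This is a sketch under the assumption that `kubectl rollout status` exits with a non-zero code when the rollout does not complete; the function name `rollout_or_undo` is hypothetical. Without `--to-revision`, the undo returns to the previous revision.

```shell
# Wait for a rollout; if it does not complete successfully, undo it.
rollout_or_undo() {
    deployment=$1
    if kubectl rollout status "deploy/$deployment"; then
        echo "rollout of $deployment succeeded"
    else
        echo "rollout of $deployment failed, rolling back" >&2
        kubectl rollout undo "deploy/$deployment"
    fi
}

# Usage (requires a running cluster):
#   rollout_or_undo hello-minikube
```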

That handles some of the basics of using Kubernetes; however, there are a great many features that could use further exploring, such as the secrets feature, which stores sensitive values such as passwords and keys for use by pods. These may be explored in some future post.